A New Jewishness Is Being Born Before Our Eyes

The future of our people is being written on campuses and in the streets. Thousands of Jews of all ages are creating something better than what we inherited.

The Student Protesters Are Demonstrating Their Bravery, Not Antisemitism

The real threat to American Jews comes not from students but from the MAGA Republicans who are shouting about antisemitism the loudest.

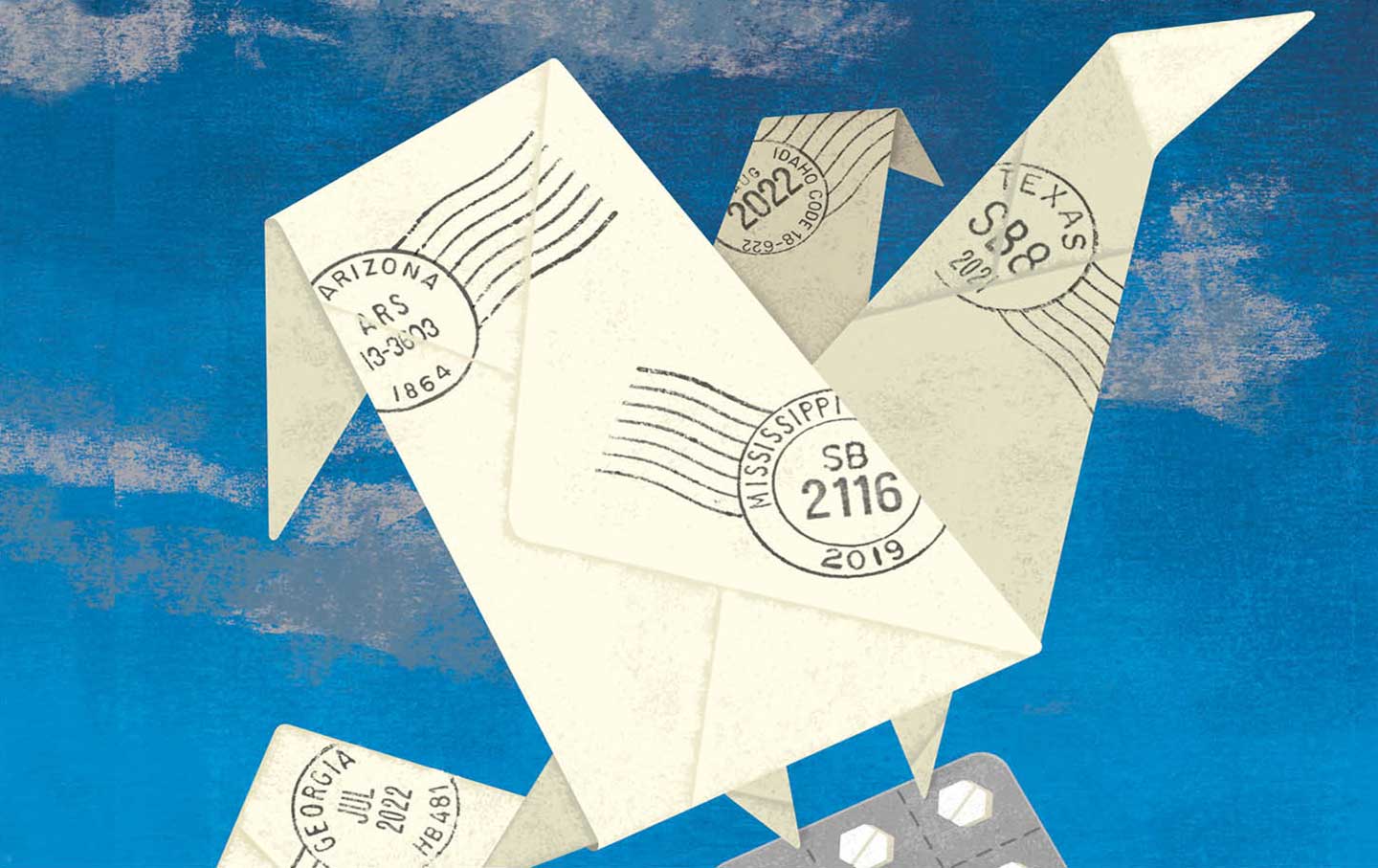

The Supreme Court Rules That Cops Can Steal Your Stuff—as They Always Have

By a 6-3 vote, the conservative justices decided that there is no need for the state to provide a preliminary hearing in civil forfeiture cases.

Palestine Is Everywhere, and It Is Making Us More Free

More letters from the apocalypse.

Latest

The Past, Present, and Future of the War In Sudan

May 10, 2024 / American Prestige / Derek Davison and Daniel Bessner

Famine as Weapon of Mass Destruction

May 10, 2024 / Steve Brodner

Antisemitism, Then and Now: A Guide for the Perplexed

May 10, 2024 / Omer Bartov

Students at Universities Across Jordan Are Protesting for Gaza

May 10, 2024 / StudentNation / Esther Sun

Racism Can Kill Black People Even When a Black Finger Pulls the Trigger

May 10, 2024 / Mark Hertsgaard

How Robert F. Kennedy Jr.’s Brain Became the Diet of Worms

May 9, 2024 / Jeet Heer

Nation Voices

Israel-Gaza War

A New Jewishness Is Being Born Before Our Eyes

The future of our people is being written on campuses and in the streets. Thousands of Jews of all ages are creating something better than what we inherited.

Rafah Is in Panic as the Israeli Invasion Begins

With Israeli forces entering Gaza’s southernmost city, scenes from the Nakba are being repeated in the strip’s last refuge.

It’s Time to Stop Ignoring the Sexual Violence Happening in Gaza

As long as our outrage is selectively assigned only to specific victims in specific contexts, we are lying to ourselves about the reality of violence in war zones.

Popular

The Supreme Court Rules That Cops Can Steal Your Stuff—as They Always Have

How Robert F. Kennedy Jr.’s Brain Became the Diet of Worms

A New Jewishness Is Being Born Before Our Eyes

Antisemitism, Then and Now: A Guide for the Perplexed

From the Archive

November 5, 2014: ‘Toward a New Beginning’: Lessons and Parallels From the GOP Rout of 1946

“Let us not fool ourselves in this hour of appraisal…”

Politics

Racism Can Kill Black People Even When a Black Finger Pulls the Trigger

Wounded in the biggest mass shooting in modern New Orleans history, African American activist Deborah Cotton embodied a true-crime parable of America’s Obama-Trump era.

The Supreme Court Rules That Cops Can Steal Your Stuff—as They Always Have

By a 6-3 vote, the conservative justices decided that there is no need for the state to provide a preliminary hearing in civil forfeiture cases.

Universities Like Mine Are Providing an Authoritarian Blueprint for Trump

The potential next president and his allies are looking at the campus-led crackdown on free speech as a perfect dress rehearsal.

Books & the Arts

Vinson Cunningham’s Searching Novel of Faith and Politics

In Great Expectations, Cunningham examines the hope and aspirations of the Obama generation.

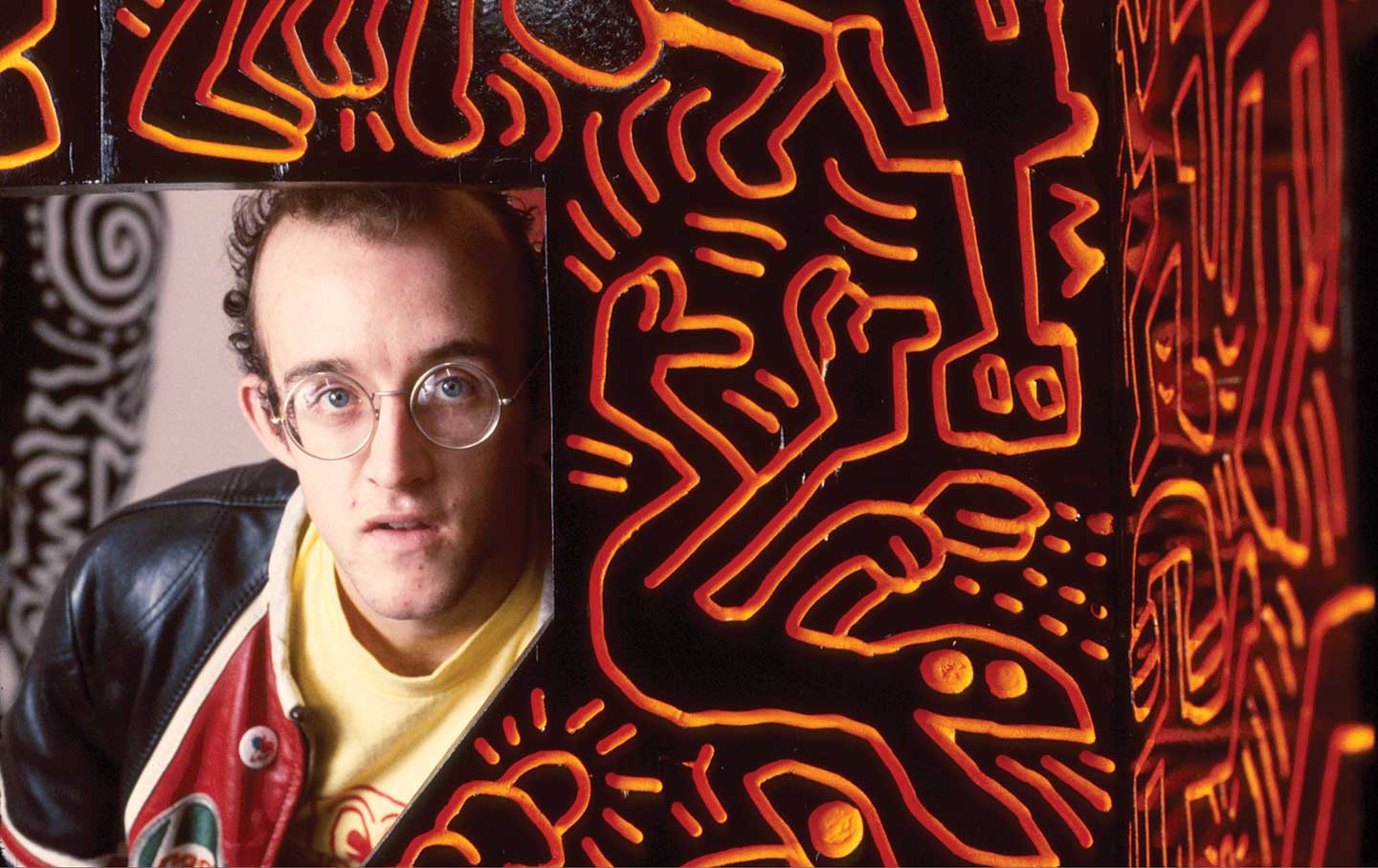

Keith Haring and the Downtown Art Revolution

A new biography tells the story of not only Haring’s life but also the exhilarating world of New York art in the 1970s and ’80s.

Nell Irvin Painter’s Chronicles of Freedom

A new career-spanning book offers a portrait of Painter’s work as a historian, essayist, and, most recently, visual artist.

Features

Latest Podcasts

The Nation produces various podcasts, including Contempt of Court with Elie Mystal, Start Making Sense with Jon Wiener, Time of Monsters with Jeet Heer, and Edge of Sports with Dave Zirin.

The Past, Present, and Future of the War In Sudan

Podcast / American Prestige

Tesla Is Choosing Hype Over Substance

Podcast / Tech Won’t Save Us

The Mob Attack on UCLA’s Protest Encampment—Plus Israel, Hamas, and Sexual Violence

Podcast / Start Making Sense