Books & the Arts

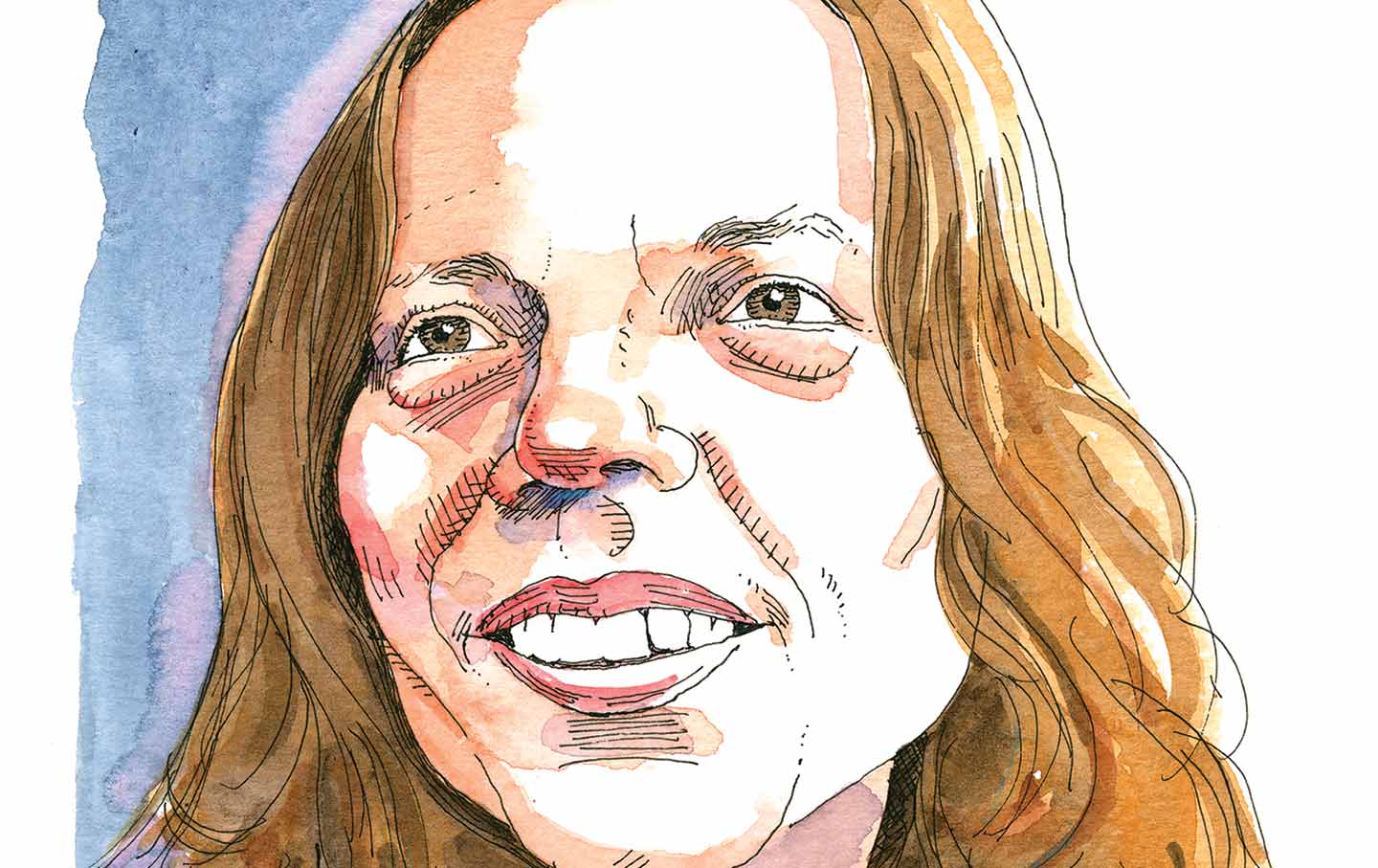

Lauren Oyler and the Critic in the Internet Age

In No Judgment, the novelist and critic explores the perilous activity of literary criticism in the era of social media.

How Did Joe Biden’s Foreign Policy Go So Off Course?

The president set out to chart a more pacific and humane foreign policy after the Trump years, but at some point he and his team of advisers lost the plot.

The End of “Curb Your Enthusiasm” Marks the End of an Era

Larry David is the last of his kind—and in several ways.

The Cosmopolitan Modernism of the Harlem Renaissance

A new exhibition at the Metropolitan Museum of Art explores the world-spanning art of the Harlem Renaissance.

From the Magazine

What Happened to the 21st-Century City?

And how we can save it.

Who Is In Charge in the Biden White House?

In The Last Politician, Franklin Foer offers a portrait of an administration at odds with itself.

The Era of Nicki Minaj

How the queen of rap revolutionized American music.

Literary Criticism

Isabella Hammad’s Novel of Art and Exile in Palestine

Enter the Ghost looks at a group of Palestinians who try to put on a production of Hamlet in the occupied West Bank.

Olga Ravn’s Novel of Parenting and Its Discontents

In My Work, the novelist examines the trials and tribulations of being a mother.

The Magic of Reading Bernard Malamud

His work, unlike that of Bellow or Roth, focused on the lives of often impoverished Jews in Brooklyn and the Bronx and bestowed on them a literary magic.

History & Politics

What Happened to the Democratic Majority?

Today the march of class dealignment feels like an inexorable fact of American political life. But is it?

The Latin School Teacher Who Made Classics Popular

A new biography of Edith Hamilton tells the story of how and why ancient literature became widely read in the United States.

Sara Ahmed and the Joys of Killjoy Feminism

To be a feminist killjoy means celebrating a different kind of joy, the joy that comes from doing critical damage to what damages so much of the world.

Art & Architecture

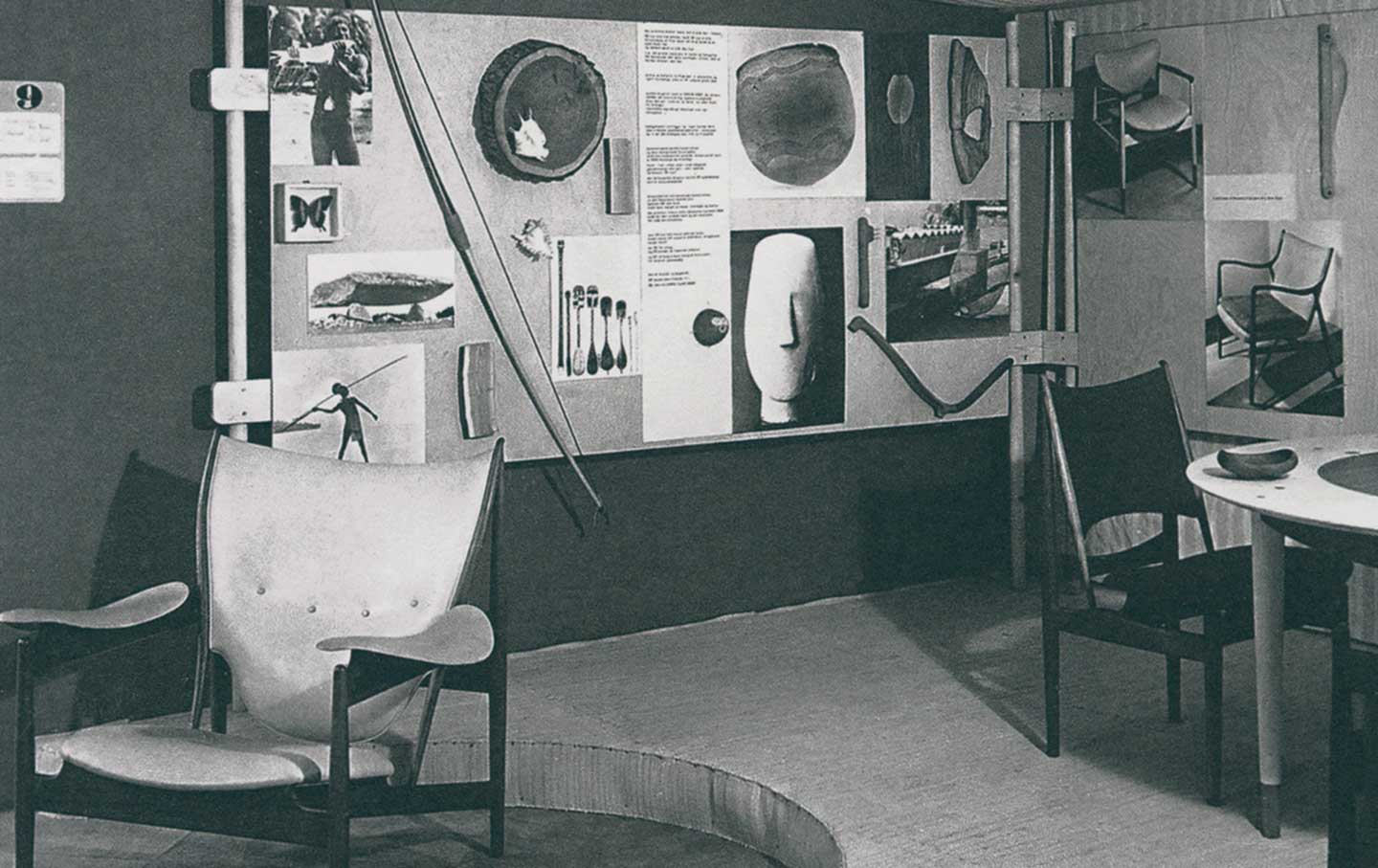

How Did Americans Come to Love “Mid-Century Modern”?

Solving the riddle of America’s obsession with postwar design and furniture.

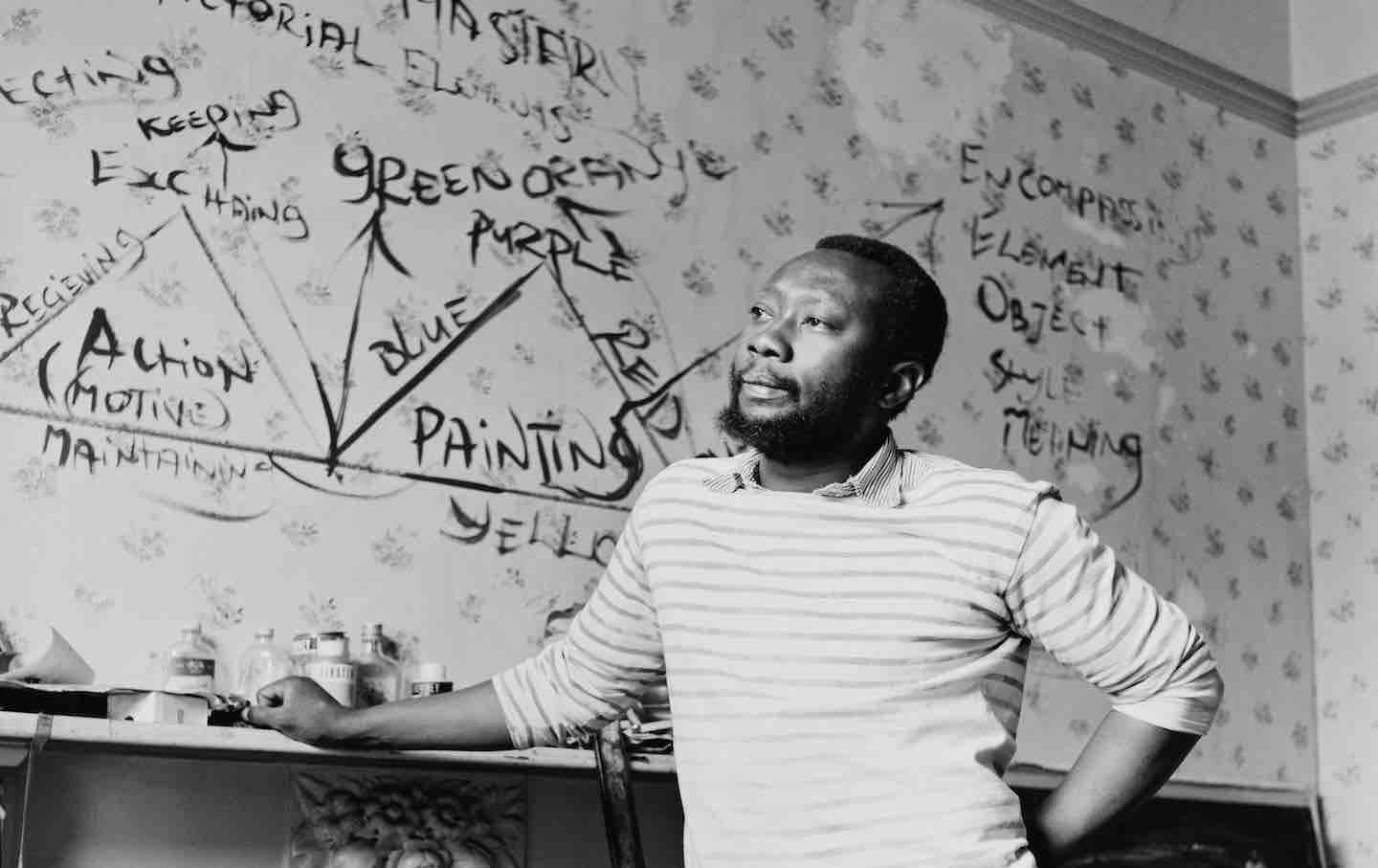

“The Subject of Painting Is Paint”: On Frank Bowling

The British artist’s work challenges all notions you might have about the relationship between politics and aesthetics.

A Hidden History of Europe’s Pre-Modernist Women Artists

A recent exhibition documenting four centuries of art from female painters and illustrators provides a new way of looking at an era of art history where women are often left out.

Film & Television

The Genius of Nuri Bilge Ceylan

About Dry Grasses is long, dense, elliptical—and brilliant.

The Metaphysical Horror of “The Curse”

From its first moments to its antic end, the series exposes its viewers to an abundance of anxious perturbation, but it does something else too: It reveals the absurdity all around…

The Odd Couples of “Drive-Away Dolls”

Ethan Coen’s horny homage to American film history’s many strains of queer comedy highlights the collaborative aspect inherent in his project as a director.

Latest in Books & the Arts

Want to Fight Mass Incarceration? Start With Your Local Jail

A new collection of essays from academics and activists devoted to prison abolition focuses on the quiet but rapid expansion of the carceral system in small towns and municipaliti…

Apr 25, 2024 / Books & the Arts / Jarrod Shanahan

Is Comedy Really an Art?

A history of comedy’s last three decades of pop-culture dominance argues that it is among the most consequential American art forms.

Apr 24, 2024 / Books & the Arts / Ginny Hogan

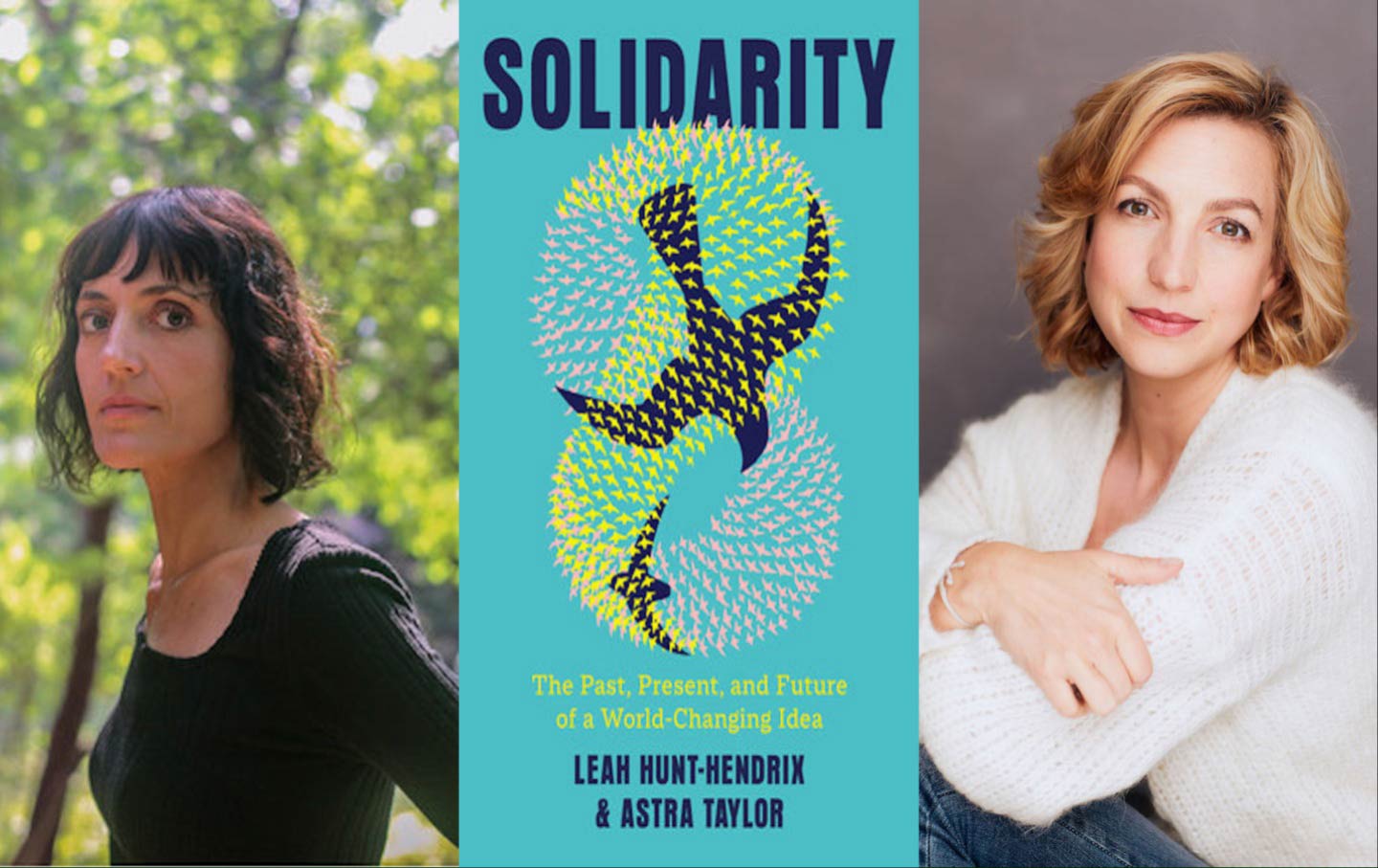

Talking “Solidarity” With Astra Taylor and Leah Hunt-Hendrix

A conversation with the activists and writers about their wide-ranging history of the politics of the common good and togetherness.

Apr 23, 2024 / Books & the Arts / Daniel Steinmetz-Jenkins

The Education Factory

By looking at the labor history of academia, you can see the roots of a crisis in higher education that has been decades in the making.

Apr 22, 2024 / Books & the Arts / Erik Baker

Pacita Abad Wove the Women of the World Together

Her art integrated painting, quilting, and the assemblage of Indigenous practices from around the globe to forge solidarity.

Apr 18, 2024 / Books & the Arts / Jasmine Liu

The Many Evolutions of Kid Cudi

In Insano, the rapper and hip-hop artist comes back down to earth.

Apr 17, 2024 / Books & the Arts / Bijan Stephen