Can the EU’s Digital Services Act Inspire US Tech Regulation?

“The effect of these large, European digital laws is going to be felt well beyond the borders of Europe.”

EU Commission Vice President Margrethe Vestager during a press conference on the Digital Services Act. (Alexandros Michailidis / Getty)

In late August, the Digital Services Act went into effect in the European Union. The act, along with the Digital Markets Act, aims to “create a safer digital space,” affecting the transparency, content monitoring, and monopolistic practices of tech companies.

The Digital Services Act updates and replaces the EU’s e-Commerce Directive, originally adopted in 2000, which set a precedent for online regulation: a basic framework for what consumer information online providers needed to make available, along with rules on online contracting and on commercial communications like advertisements.

Since 2000, though, the role online platforms play in our lives has grown in unimaginable ways—as have the platforms themselves.

That’s where the DSA comes in. The European Commission, led by commissioners Margrethe Vestager and Thierry Breton, originally proposed the acts in December 2020. Now, the DSA will shift new responsibilities for illegal goods to sales platforms, such as Amazon. These companies will have to provide ways for consumers to flag these goods and identify their sellers. At the same time, social media companies will have to implement similar systems for illegal or harmful content, along with bans on targeted advertising toward children or around certain personal categories, such as sexuality, ethnicity, and political views. The act also adds other transparency measures, from platforms’ recommendation algorithms to risk management systems.

While these rules apply only to EU citizens, this transnational legislation will have international effects. The majority of the companies affected by these regulations are headquartered in the United States. Several companies, including Meta and Microsoft, have released statements explaining the changes they’ll make, while making clear that those changes will apply to EU citizens only. Meta, for example, plans to increase transparency by explaining its content-ranking algorithms, make reporting tools easier to access, and give users the option to turn off recommendation AI entirely.

But Americans will still be affected—even if indirectly—said Robert Gulotty, associate professor of international political economy at the University of Chicago. “Most of this stuff will be affecting things that you won’t see, like the companies that would have never been there, but you will never see emerge the products that they would have designed,” Gulotty said. “Most of it’s going to be the absence of things, as opposed to the presence of things.”

According to Samuel Woolley, assistant professor of journalism and project director for propaganda research at the Center for Media Engagement at the University of Texas at Austin, “the effect of these large, European digital laws is going to be felt well beyond the borders of Europe.” Companies operating within the EU will have to hire workers to comply with regulations, a cost he expects will be passed along to consumers.

But the United States is still involved—and in ways deeper than you might expect. The Federal Trade Commission reportedly sent officials overseas to help with the Digital Services Act’s implementation and enforcement, despite the EU’s regulation of largely American businesses. Republicans weren’t happy. The House of Representatives’ Committee on Oversight and Accountability opened an official inquiry in August. Senator Ted Cruz accused the FTC of “colluding with foreign governments” by helping the EU to “target” American businesses.

According to Gulotty, the regulations affecting targeted advertising and disinformation would affect domestic politics; specifically, Republican campaigns. “The idea that there’d be government checks on disinformation—for him, that’d be literally targeting his own campaign and the campaign of his allies.” The FTC declined to comment on this story.

Most companies that the act targets are American companies, not European ones, and the letter announcing the inquiry cites losses of $22 to $50 billion in new compliance and operation costs. Large sections of the act are designed to protect human rights. It prevents amplification of hate speech and propaganda, moderates content for pornographic material, and aims to prevent racial and gender biases, according to Sherilyn Naidoo, legal and policy adviser for Amnesty Tech.

Woolley also believes changes made to follow EU regulations may eventually be rolled out to American consumers; however, it’s a fine line, he said. Companies will have to weigh consumer protections and safety against freedom of speech regulations. “They’re really trying to thread the needle on this,” Woolley said, “because they built these platforms…without really thinking about how they would become, or without perceiving or having foresight about how they would be used in the marketplace of ideas and for the spread of news.”

But many US social media companies have actually gone backward on regulating misinformation over the past few years. Following Elon Musk’s takeover of Twitter, now known as X, companies like YouTube and Meta have slowed the labeling and removing of political misinformation and election denialism, and Meta now offers the ability to “opt out” of fact-checking services.

“They are almost gleefully embracing these calls for deregulation of content, content policing, content moderation that are being espoused by folks like Elon Musk,” said Woolley, “because it allows them to point to someone else as the blame, for why they’ve made decisions to move away from it.”

This isn’t the first time American companies have been impacted by European tech legislation. In May 2018, the EU’s General Data Protection Regulation took effect, meant to ensure that European citizens’ data be securely processed and handled. The GDPR applies to all companies dealing with EU data, regardless of where they are headquartered.

Countries around the world have passed similar regulations in years since. But not the US. “The idea in the United States that there should be an open marketplace of ideas through which the best ideas rise to the top is really important, and that there’s a multiplicity of ideas—that’s central to our conceptualization of democracy,” said Woolley. “In Europe, culturally speaking and legally speaking, they’ve had more of a stomach for generating policy that restricts people’s speech in order to protect other people’s safety.”

But US technology and social media companies have been protected by Section 230 of the Communications Decency Act since its passing in 1996. “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider,” reads the act.

These companies aren’t required to moderate that content, according to Roy Gutterman, director of the Tully Center for Free Speech at Syracuse University. In one recent Supreme Court case, Twitter, Inc. v. Taamneh, the court ruled against relatives of ISIS victims, saying that platforms hosting, displaying, and recommending content posted by terrorists intended to recruit others to their cause were not aiding and abetting terrorism.

The power social media companies hold in the policymaking space is immense. Facebook is expected to bring in $21.4 billion in profits in 2023. These companies are “incalculably powerful,” according to Woolley. He said he’s spoken to regulators and policymakers from smaller countries who feel they have to bend to companies’ wills to do business in other sectors; these accused monopolies’ lobbying forces and international power mean their “hands are tied.”

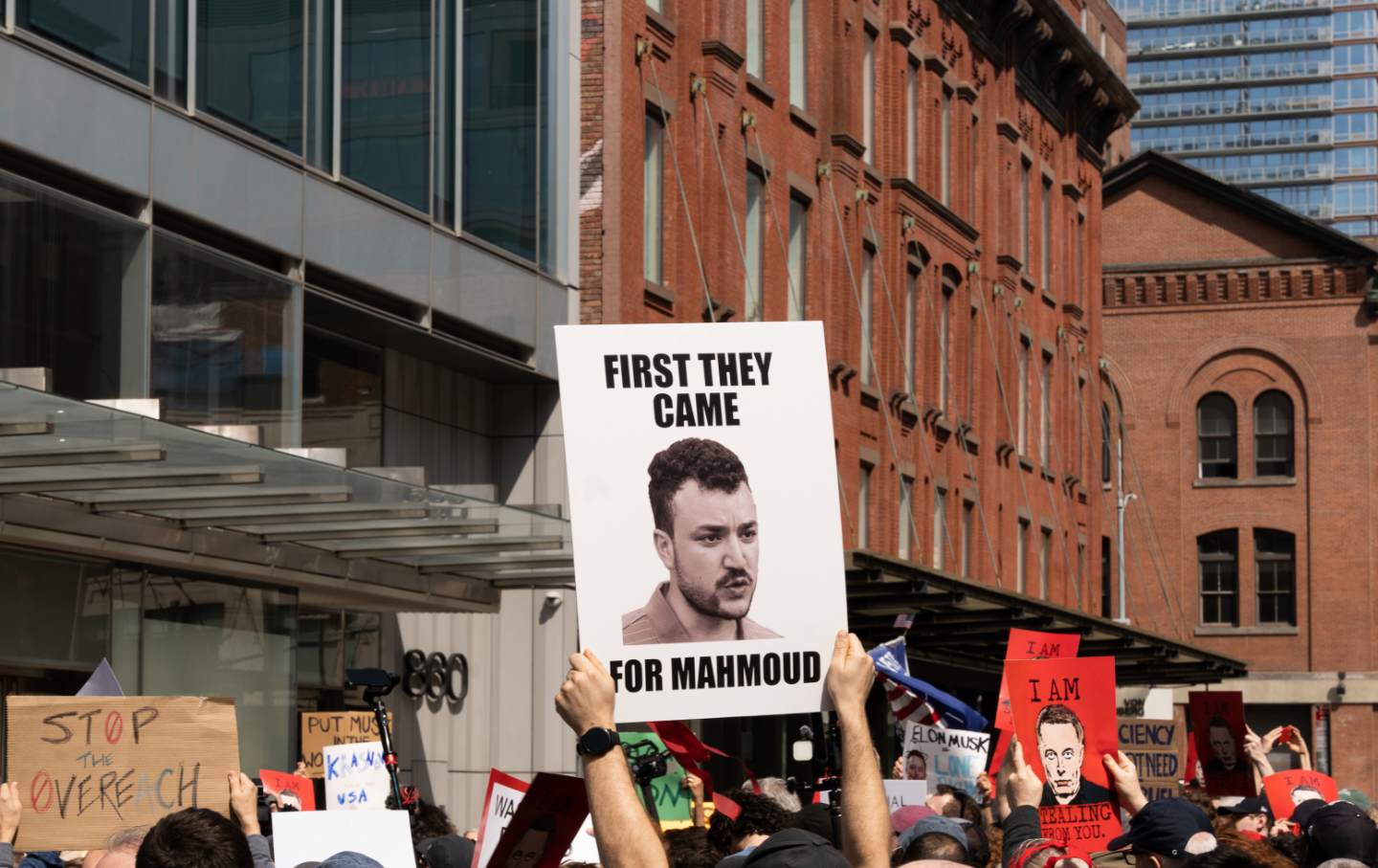

In the EU, though, X is facing real trouble. Misinformation and hate speech have spread since Elon Musk took over as owner, with X reportedly having the highest ratio of disinformation of any major social media platform. As a result, the EU has warned Musk to comply with the DSA, which has reportedly made him consider making X unavailable in the region.

In the lead-up to the DSA’s full implementation, some companies are trying to challenge the scope of regulatory power. In September, Apple and Microsoft raised a challenge to the Digital Markets Act, arguing that their products iMessage and Bing, respectively, aren’t popular enough in EU markets to be included in the law’s coverage. Apple also plans to challenge the EU’s decision to put its App Store onto the EU’s antitrust list, according to Reuters.

Where does all this leave the United States? For any big change domestically, Gutterman said, we’d have to see platforms shift from a passive role to an active one. American regulation has historically been hands-off, and social media companies would have to be creating their own content or actively facilitating the spread of information for regulators to find a “compelling interest” that would change the policy tune.

But the important part is that it can change, according to Amy Kristen Sanders, associate professor of media law in the University of Texas at Austin’s School of Journalism and Media. “Early on, here in the US, we made the decision when Congress passed Section 230 of the Communications Decency Act that we were going to immunize platforms from the harm that the content on them caused,” Sanders said. “Perhaps that was the right decision then. I’m not sure it’s the right decision now.”

In 2022, Senators Richard Blumenthal and Marsha Blackburn cosponsored the bipartisan Kids Online Safety Act and reworked it in 2023. It aims to create online protections from exploitation and bullying, and would require platforms that kids use to protect children’s information and avoid showing them age-restricted advertising.

But some senators have looked at the issue in a different way. There are currently two separate bills aiming to create a regulatory agency, similar to the Federal Communications Commission, for the new digital space. Under Senators Michael Bennet and Peter Welch’s Digital Platform Commission Act, the commission would create and enforce rules for digital platforms. Importantly, it would have the ability to name “systemically important” platforms, similar to the DSA’s “very large online platform” designation.

The other bill, a bipartisan effort sponsored by Senators Elizabeth Warren and Lindsey Graham, would create the Digital Consumer Protection Commission, an independent commission to enforce regulations about transparency, privacy, and national security and to promote competition.

But there are other challenges to Big Tech in America. Google is currently facing an antitrust lawsuit from the Department of Justice for allegedly paying tens of billions of dollars to tech companies like Apple in exchange for “default” search engine status. This makes it impossible for other search engines to compete, according to Microsoft CEO Satya Nadella.

Google’s trial is just one of a series of antitrust cases. Meta now faces legal action by the FTC over its acquisition of competitors like Instagram and emerging AI companies. The FTC also sued Amazon for monopolistic and anticompetitive practices. Earlier this month, The New York Times reported that officials within the Justice Department could file an antitrust case against Apple this year over the “company’s strategies to protect the dominance of the iPhone.”

Actual regulation of these big companies in the US might eventually look similar to the DMA’s regulation of “gatekeepers,” or companies whose product range is broad enough that they can promote their own products across platforms; for example, Apple’s App Store pushing Apple Maps over Google Maps.

So far, there’s been some progress with data privacy legislation at the state level. At the beginning of 2023, Colorado, Connecticut, Utah, and Virginia passed laws to protect personal information and sensitive data, joining California, whose trailblazing consumer privacy legislation passed in 2018. Federally, though, we’ve only seen a collection of hyper-specific privacy laws, such as HIPAA, COPPA, and FERPA. Advocates for data privacy argue that comprehensive legislation is necessary to stop data abuse. Fight for the Future, a nonprofit centered on digital rights, writes, “Banning one app, like TikTok, won’t prevent that. Only strong federal data privacy legislation can really address this without infringing upon the free expression of millions of social media users.”