As the Press Turns

Quick, pinch me--am I still living in the same country? Reading and watching the same media? This "Bob Woodward" fellow who co-wrote a tough piece in the May 18 Washingto...

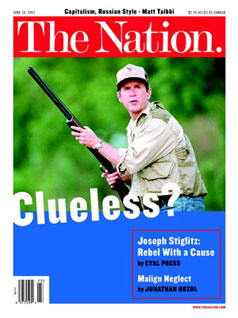

This soon-to-be-classic Ed Sorel cartoon is available only in our print edition. Sorry!

During the long months of post-September 11 presidential invincibility, no member of Congress climbed further out on the what-did-Bush-know-when limb than Representative Cynthi...

On May 2 the Senate, in a vote of 94 to 2, and the House, 352 to 21, expressed unqualified support for Israel in its recent military actions against the Palestinians. The re...

George W. Bush, it is true, did not create the FBI's smug, insular, muscle-bound bureaucracy or the CIA's well-known penchant for loopy spy tips and wrongheaded geopolitical an...

We join the city of Chicago in congratulating our friend and colleague Studs Terkel on his ninetieth birthday. By mayoral proclamation, May 16 was Studs T...

The question is not the 1970s cliché, What did the President know and when did he know it? The appropriate query is, What did US intelligence know--and what did the ...

Perhaps there's a limit to female masochism after all. To the great astonishment of the New York Times, which put the story on page one, Creating a Life, Sylvi...

For Senator Clinton to flourish a copy of the New York Post--the paper that has called her pretty much everything from Satanic to Sapphist--merely because it had the pung...

OK, so maybe John Ashcroft and Robert Mueller are not the sharpest tools in the shed. How else to explain that, after September.

British folk-rocker Billy Bragg has to be the only popular musician who could score some airtime with a song about the global justice movement. The first single from Bragg's...

I haven't done much mental spring cleaning because so much of the last month has been taken up with brooding and spewing about the crisis in the Middle East; no doubt t...

Almost everything that is wrong with Washington Post foreign editor David Hoffman's new book about Russia's transformation into a capitalist system, The Oligarchs...

As if the back streets of our local city

might dispense with their pyrrhic accumulation of dust and wineful

tonality,

offer a reprise of love itself, a careless l...

There is a difference it used to make,

seeing three swans in this versus four in that

quadrant of sky. I am not imagining. It was very large, as its

effect...

You may recall Insomnia as a Norwegian film made on a modest budget--do I repeat myself?--about the inner life of a morally compromised police detective. The picture enj...

Popular perception notwithstanding, the theory of natural selection was accepted by every serious evolutionist long before Darwin. Earlier scientists interpreted it as the clea...

Although Chicano identity has been Luis Valdez's theme since all but the earliest years of El Teatro Campesino, the guerrilla theater he founded in the 1960s, getting a clear s...
