In the “Year of Elections,” Is AI on the Ballot?

Politicians like Pakistan’s Imran Khan have found themselves duplicated by artificial intelligence—sometimes by their own parties.

On December 17, 2023, more than a million supporters of Imran Khan, the former prime minister of Pakistan, fired up their browsers to take part in Pakistan’s first ever virtual rally. Khan’s party, the Pakistan Tehreek-e-Insaaf (PTI), has been the subject of a brutal crackdown by the country’s powerful military establishment, after thousands of his supporters laid siege to military installations in opposition to his arrest last May. Toward the end of the nearly five-hour virtual event—which was made necessary by the restrictions placed on the party assembling in public—something seemingly inexplicable happened. Viewers were treated to a keynote speech by Khan himself, in spite of his being incarcerated in Adiala Jail. “You all must be wondering how I’m doing in prison,” he said. “The first thing I want to make clear is that if it brings Pakistan true freedom, living in jail for me is an act of worship.”

In actual fact, the voice was not that of Imran Khan. The digital media team of the PTI had trained an artificial intelligence program to mimic Khan’s speech patterns by feeding it a selection of his oratory, and then used the clone to deliver a speech based on notes smuggled out of prison by the PTI chairman’s lawyers.

“What we wanted to give the audience was the feeling that Imran Khan was there, but with the disclaimer that this was the AI voice of Imran Khan based on his notes from jail,” says Jibran Ilyas, a Chicago-based Khan loyalist who masterminded the initiative. “It helped us get his message across, and that’s the most important thing, because people are really looking at him to guide not just the PTI but Pakistan as a whole.”

In 2024, a year when it is estimated that more than 2 billion people worldwide will go to the polls to choose their representatives, policymakers and strategists have begun to turn their eyes toward the possibility of artificial intelligence playing a decisive role in global democracy. Kat Duffy of the Council on Foreign Relations has described the current environment as a “post-market, pre-norms space,” one where emergent technologies have entered the marketplace but the regulation to control their output is yet to be determined.

Throughout South Asia, machine-learning and AI technologies have already begun to play a significant role in the democratic process. There is the now infamous case of Indian Prime Minister Narendra Modi, whose AI-cloned voice has been made to sing songs in a variety of regional languages he is known not to speak—an innovation that raises the possibility of the same being done to make his speeches accessible in parts of the country where Hindi is either not the first language or is poorly understood.

In Pakistan, commentators and digital media specialists have hailed PTI’s strategy as a significant milestone. “I thought it was a pretty effective way of circumventing the persecution that the political party has been facing,” says Usama Khilji, an Islamabad-based digital rights activist. “Now you have a dude who’s in jail addressing everyone through AI because you can, so I thought it was pretty innovative.”

The recently concluded election in Bangladesh, however, became a case study in how artificial intelligence can be used to peddle disinformation, particularly among voters who lack the sophistication to differentiate between authentic audiovisual content and deepfake clones. In one instance, an AI-generated video posted on Facebook showed exiled opposition leader Tarique Rahman arguing that his political party, the BNP, ought to placate the United States by keeping quiet about the Israeli offensive in Gaza—a potentially disastrous position to take in a country where more than 90 percent of the population is Muslim.

A report by the Financial Times published last month uncovered a campaign of disinformation in Bangladesh, where pro-government news outlets were promoting deepfakes and other AI-created media to manipulate public opinion in the run-up to the January 7 general election. Sayeed Al-Zaman, a Bangladeshi academic whose research focuses on digital information, believes that “tools and techniques such as deepfakes, autogenerated content, and AI bots could become potent carriers of political propaganda” in Bangladesh and worldwide.

According to Al-Zaman, whose research indicates that over 60 percent of individuals exposed to online disinformation are susceptible to believing it, the advent of artificial intelligence has made the challenge of verification more difficult for two reasons. “Firstly, effective AI-based misinformation detection tools are not yet widely available, making it difficult for the masses to discern between fact and fiction,” he tells The Nation. “Secondly, digital information literacy offers limited assistance in cases of AI-based misinformation due to its heightened level of sophistication.”

Even in the United States, AI-generated content has been deployed in attempts to influence voters. On Monday, NBC News reported that a robocall featuring an AI voice clone of President Biden was being used to discourage New Hampshire residents from voting in its presidential primary. Last year, deepfake photographs surfaced on social media showing Donald Trump wrestling with police officers.

In November, social-media giant Meta announced that it would require political campaigns to disclose their use of artificial intelligence in advertisements published on all of its platforms, a policy meant to be rolled out this month. But at precisely the moment when the need for content moderation has reached its highest point, the tech sector is experiencing mass layoffs. Last year, Twitter instituted a number of cuts to its trust and safety team under the leadership of Elon Musk—a decision that was copied by a number of other tech giants including Meta and Amazon. All in all, the industry tracker Layoffs.fyi estimates that around 250,000 tech workers were let go in 2023.

The implications for the Global South are obvious; in a country like Pakistan, where 60 million people are classified as illiterate, the electorate is ripe for manipulation. But the danger of election interference is also considerable in affluent countries, where these AI technologies are at their most developed and sophisticated. It is not out of the question that 2024, which has been dubbed the year of the election, turns out instead to be the year of misinformation.

Take a stand against Trump and support The Nation!

In this moment of crisis, we need a unified, progressive opposition to Donald Trump.

We’re starting to see one take shape in the streets and at ballot boxes across the country: from New York City mayoral candidate Zohran Mamdani’s campaign focused on affordability, to communities protecting their neighbors from ICE, to the senators opposing arms shipments to Israel.

The Democratic Party has an urgent choice to make: Will it embrace a politics that is principled and popular, or will it continue to insist on losing elections with the out-of-touch elites and consultants that got us here?

At The Nation, we know which side we’re on. Every day, we make the case for a more democratic and equal world by championing progressive leaders, lifting up movements fighting for justice, and exposing the oligarchs and corporations profiting at the expense of us all. Our independent journalism informs and empowers progressives across the country and helps bring this politics to new readers ready to join the fight.

We need your help to continue this work. Will you donate to support The Nation’s independent journalism? Every contribution goes to our award-winning reporting, analysis, and commentary.

Thank you for helping us take on Trump and build the just society we know is possible.

Sincerely,

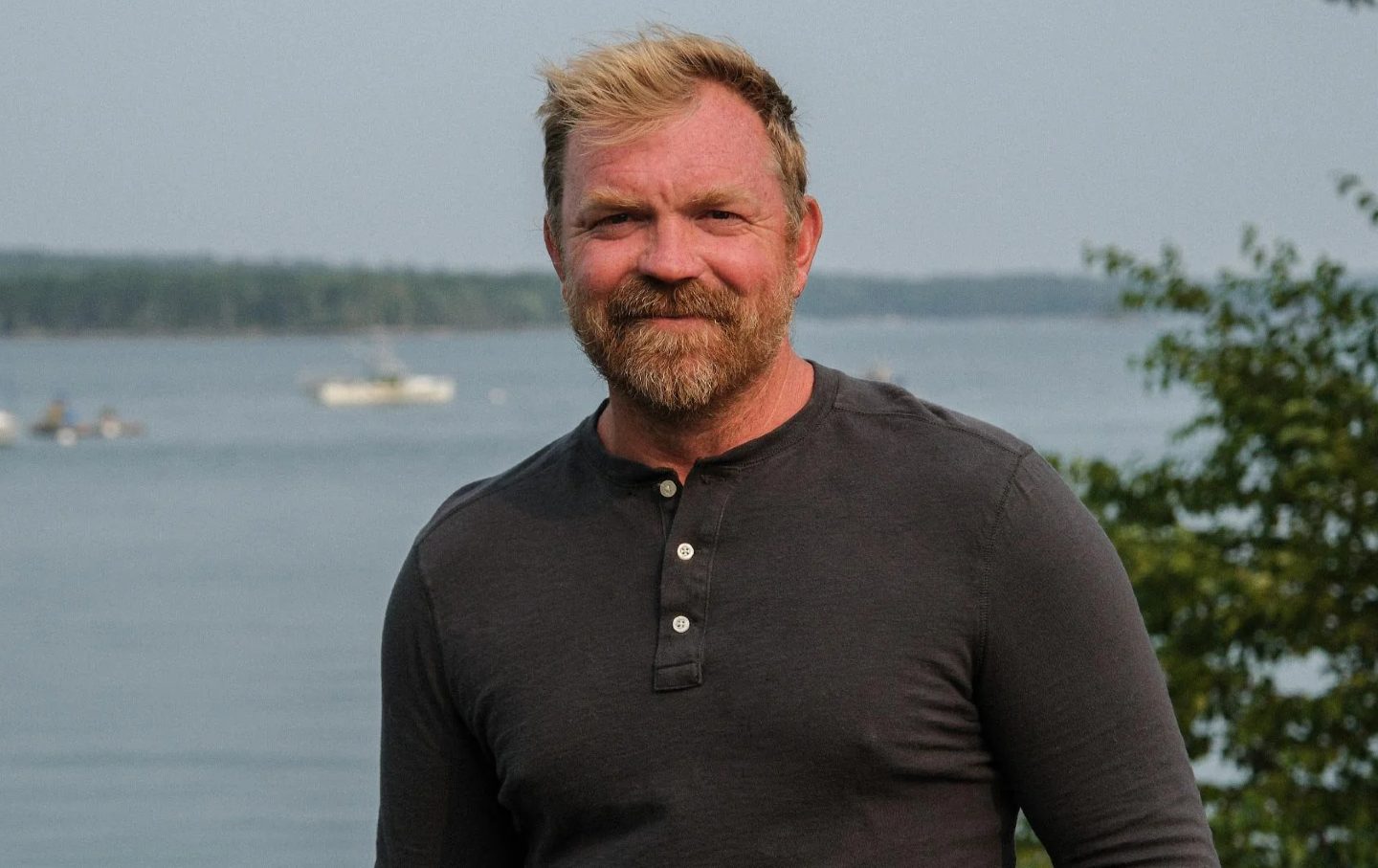

Bhaskar Sunkara

President, The Nation