The Court’s Terrible Two

Saving the worst for last, on the final day of the term the Supreme Court issued 5-to-4 rulings on school vouchers and drug testing that blow a huge hole in the wall of church-...

The country is riven and ailing, with a guns-plus-butter nuttiness in some of its governing echelons and the sort of lapsed logic implicit in the collapse of trust in money-cen...

SEC chairman Harvey Pitt lurches from lapdog to bulldog, threatening CEOs with jail time if their corporate reports mislead. George Bush demands "top floor" accountability. Rep...

It was bad enough that the Bush Administration co-opted the Children's Defense Fund slogan "Leave No Child Behind." Then the most famous former board member of CDF, Hillary Rod...

When the New York City Board of Education called on public schools to bring back the Pledge of Allegiance in the wake of 9/11, my daughter, a freshman at Stuyvesant High, th...

The essential case for the abolition of capital punishment has long been complete, whether it is argued as an overdue penal reform, as a shield against the arbitrary and the irr...

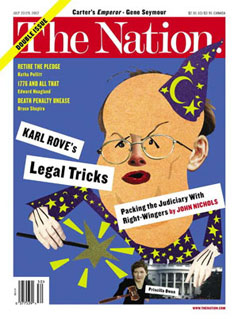

Vice President Dick Cheney has spent most of the past year in hiding, ostensibly from terrorists, but increasingly it seems obvious that it is Congress, the Securities and Exc...

For President Bush to pretend to be shocked that some of the nation's top executives deal from a stacked deck is akin to a madam feigning surprise that sexual favors have been...

Last week, while Bush spoke to Wall Street about corporate malfeasance, he was beset by questions about the timing of his sale of stock twelve years ago while he served as a d...

Guerrilla Radio, published by Nation Books, is the remarkable story of B92, a Belgrade radio station founded in 1989 by a group of young idealists who simply wante...

Much as I hate to, I'm going to start by talking about the damn money. I'm only doing it because almost everyone else is.

It's not just the author profiles and publi...

In Steven Spielberg's latest picture, a skinheaded psychic named Agatha keeps challenging Tom Cruise with the words, "Can you see?" The question answers itself: Cruis...

One of the most persistent myths in the culture wars today is that social science has proven "media violence" to cause adverse effects. The debate is over; the evidence is o...

A half-century ago T.H. Marshall, British Labour Party social theorist, offered a progressive, developmental theory for understanding the history of what we have come to call c...